SurroundOcc: Multi-Camera 3D Occupancy Prediction for Autonomous Driving

¹Tsinghua University

²Tianjin University

³PhiGent Robotics

Demos

Occupancy prediction:

Generated dense occupancy labels:

Comparison with TPVFormer:

In the wild demo (trained on nuScenes, tested on Beijing street):

Abstract

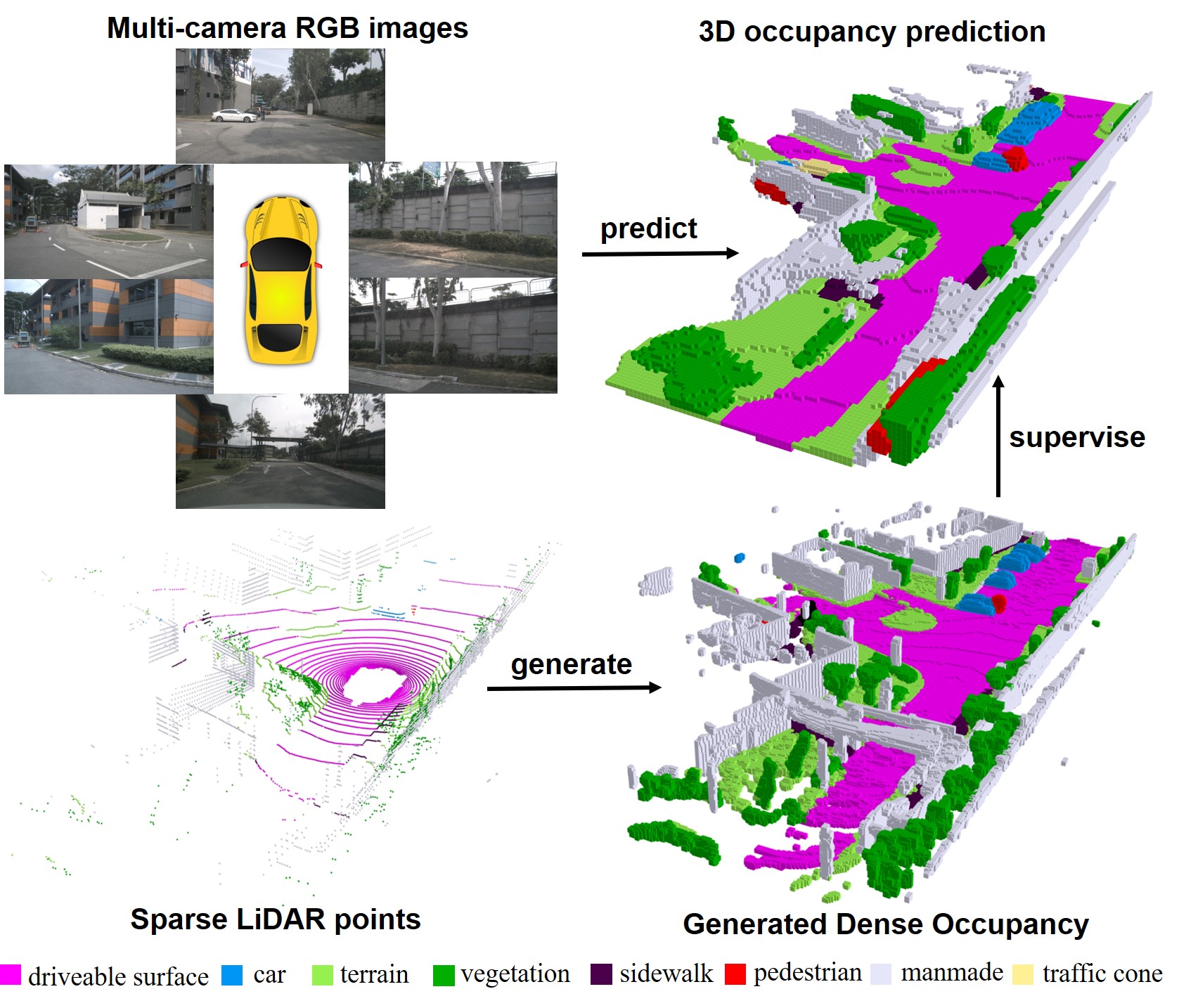

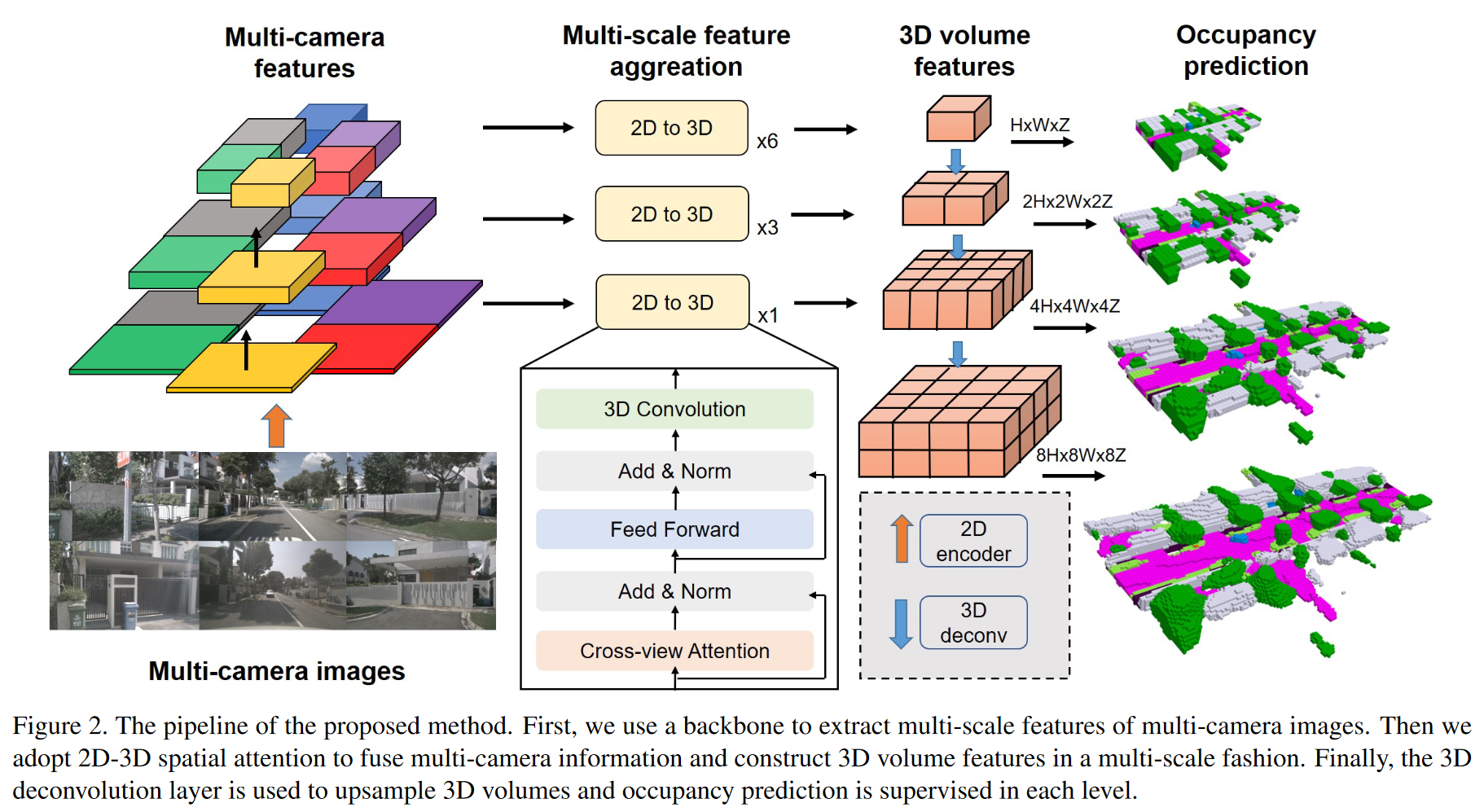

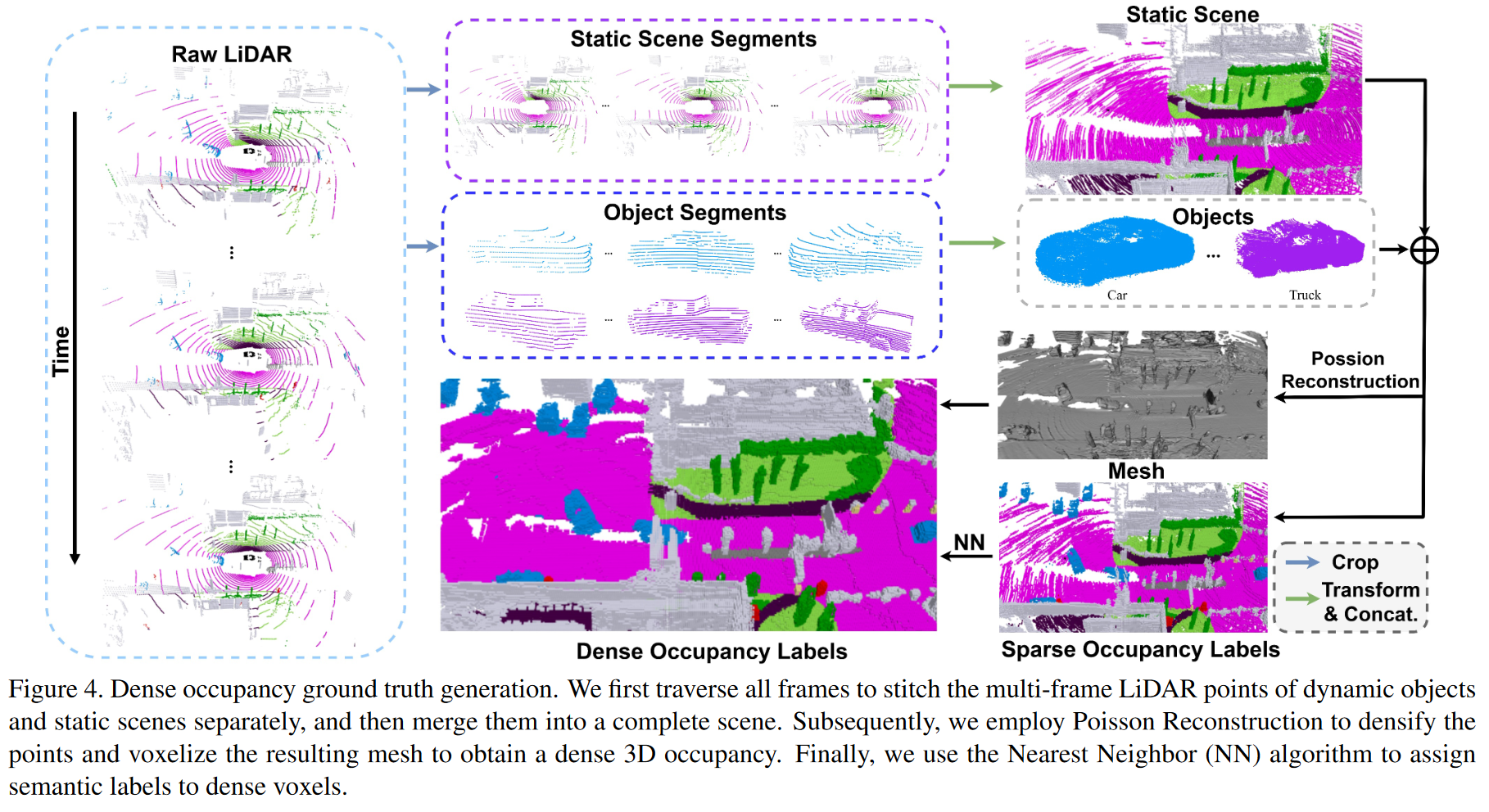

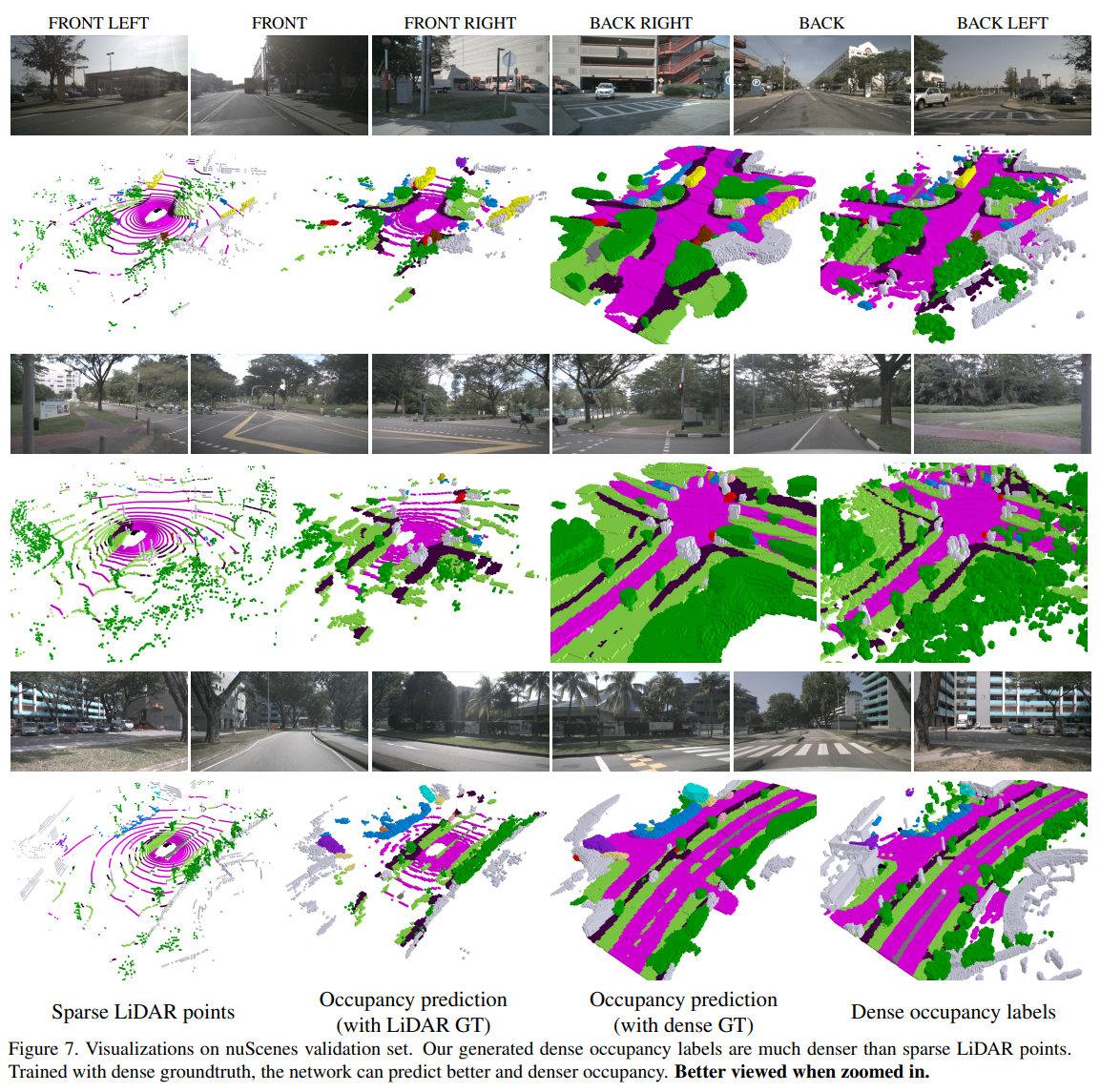

Towards a more comprehensive perception of 3D scenes, we propose SurroundOcc, a method that predicts 3D occupancy from multi-camera images. We first extract multi-scale features from each image and adopt spatial 2D-3D attention to lift them into the 3D volume space. We then apply 3D convolutions to progressively upsample the volume features and impose supervision at multiple levels. To obtain dense occupancy predictions, we design a pipeline that generates dense occupancy ground truth without expensive occupancy annotations. Specifically, we fuse the multi-frame LiDAR scans of dynamic objects and static scenes separately, then apply Poisson Reconstruction to fill the holes and voxelize the resulting mesh to obtain dense occupancy labels. Extensive experiments on the nuScenes and SemanticKITTI datasets demonstrate the superiority of our method.
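Lifting image features into a 3D volume requires relating each volume position to image pixels. One common way to do this is to project voxel centers into each camera. The snippet below is a minimal sketch of that geometric relation only, not of the attention mechanism itself. The function names, the pinhole intrinsics `fx`, `fy`, `cx`, `cy`, and the assumption that points are already expressed in the camera frame are all illustrative and are not taken from the SurroundOcc code.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def voxel_center(index: Tuple[int, int, int], origin: Vec3, voxel_size: float) -> Vec3:
    """Return the metric center of a voxel given its integer grid index."""
    return tuple(origin[a] + (index[a] + 0.5) * voxel_size for a in range(3))


def project_to_image(
    point_cam: Vec3,
    fx: float, fy: float, cx: float, cy: float,
    width: int, height: int,
) -> Optional[Tuple[float, float]]:
    """Project a point in the camera frame (z forward) with a pinhole model.

    Returns pixel coordinates (u, v), or None when the point lies behind the
    camera or falls outside the image, so it has no feature to sample there.
    """
    x, y, z = point_cam
    if z <= 0.0:
        return None  # behind the camera plane
    u = fx * x / z + cx
    v = fy * y / z + cy
    if 0.0 <= u < width and 0.0 <= v < height:
        return (u, v)
    return None
```

In a multi-camera setting, each voxel center would be projected into every camera, and only the views where the projection is not `None` would contribute image features to that voxel.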

Approach

Method pipeline:

Occupancy ground truth generation pipeline:

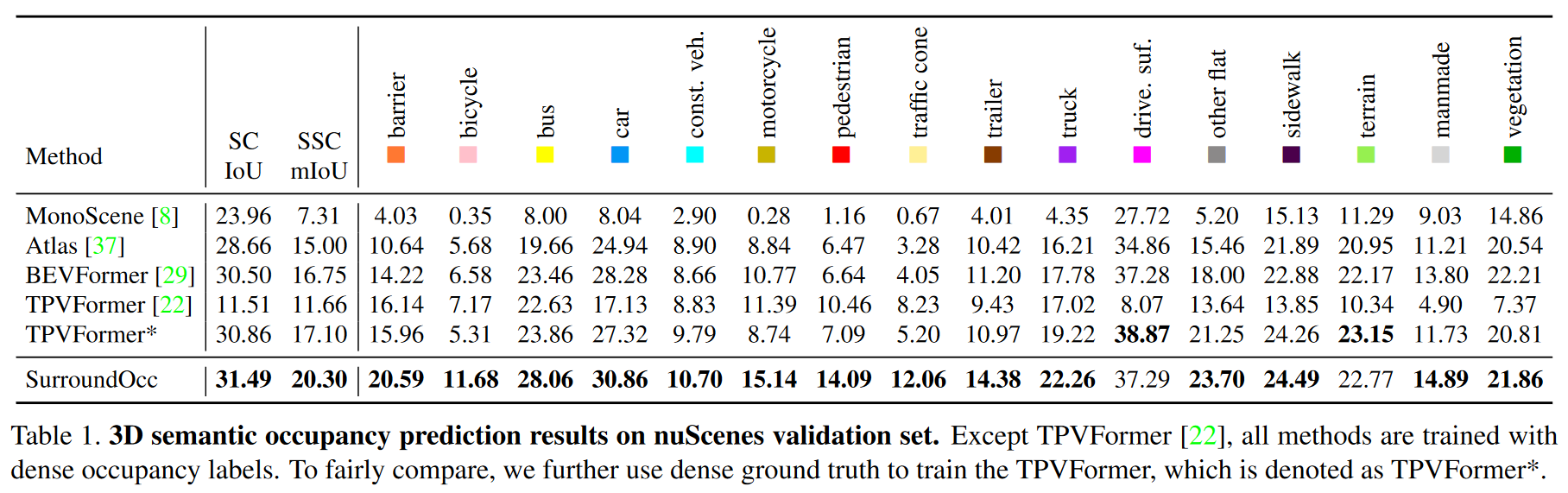

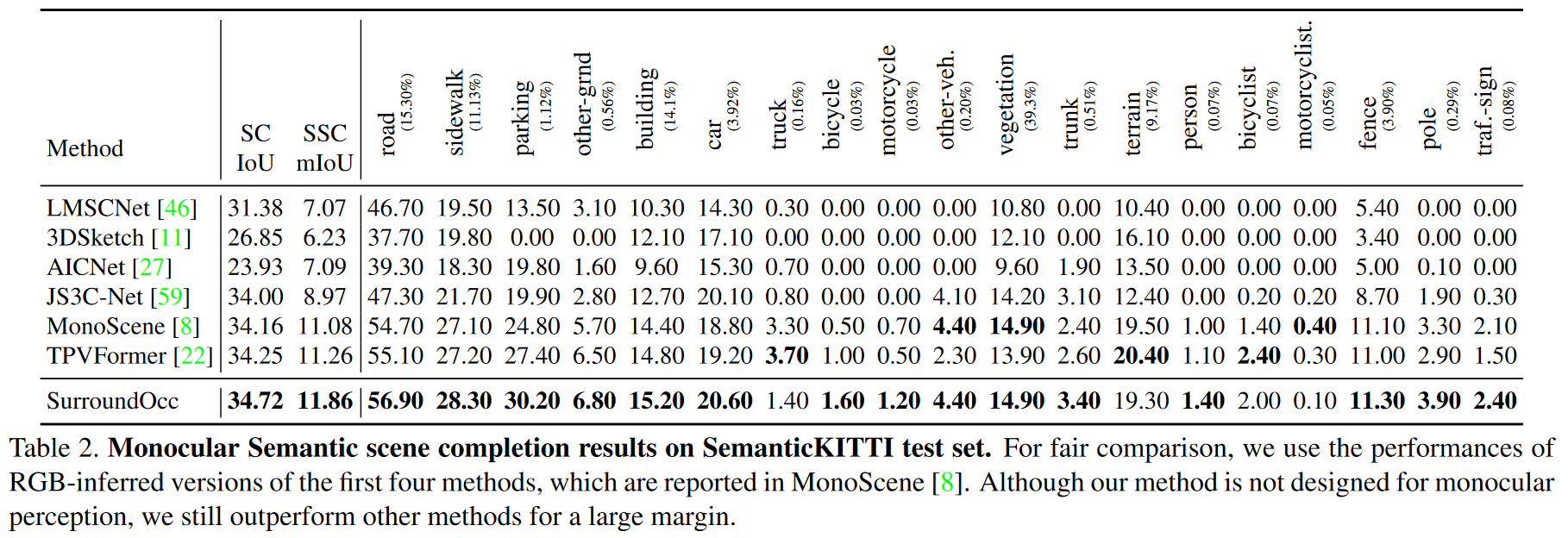

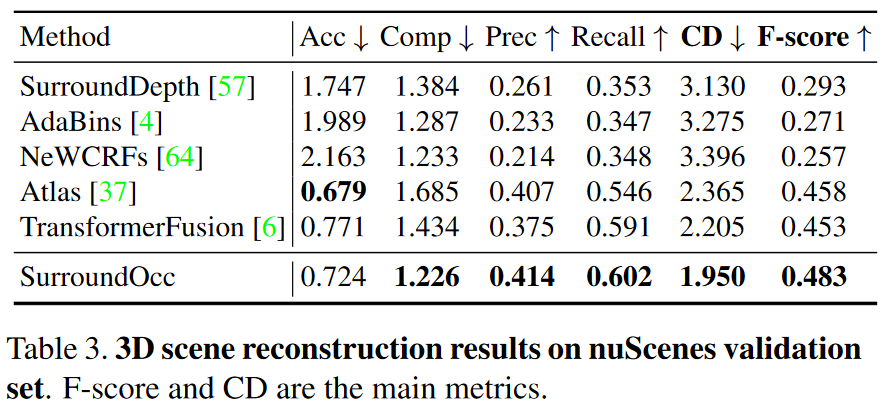

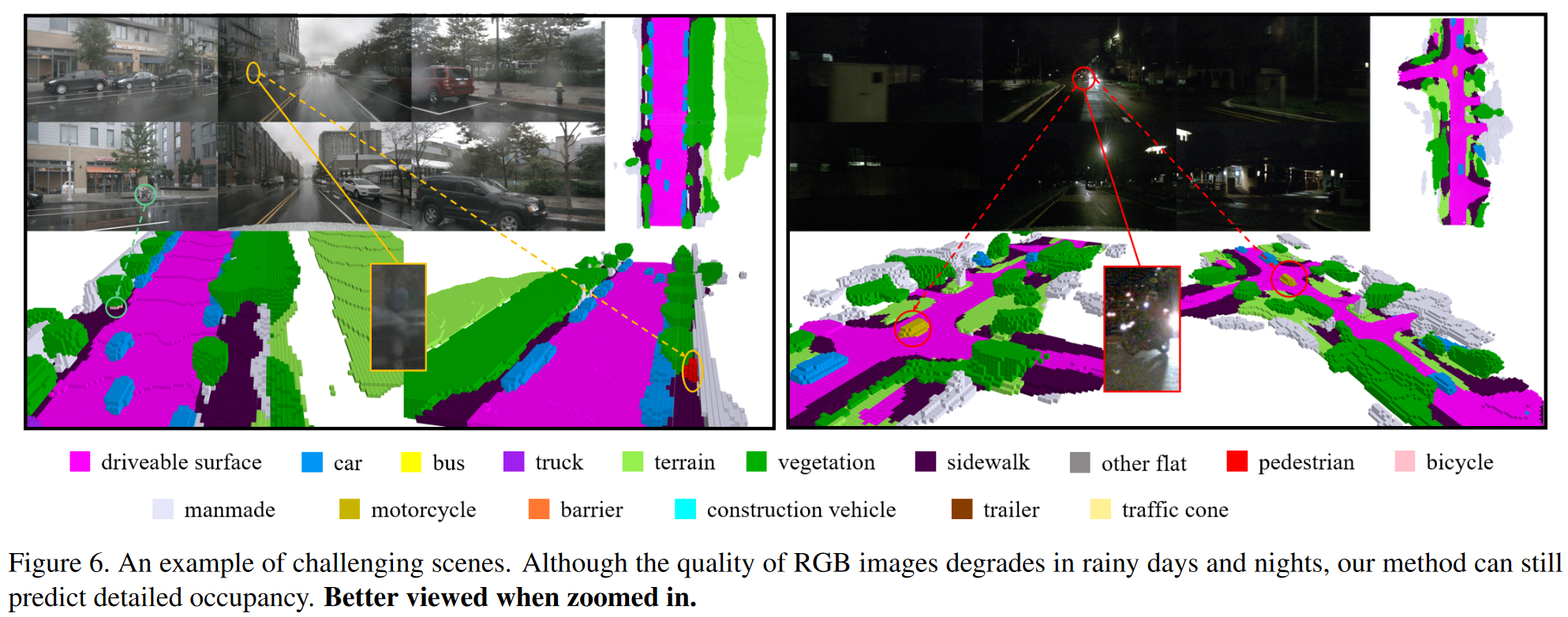
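The ground truth generation described in the abstract starts by fusing multi-frame LiDAR scans and ends by voxelizing the result. As a rough sketch of those two ends of the pipeline, the code below transforms each scan into a shared frame with a 4x4 pose and marks the voxels that contain at least one point. It deliberately skips the separate handling of dynamic objects and the Poisson Reconstruction step, and it voxelizes raw points rather than a mesh. The names `transform`, `fuse_scans`, and `voxelize`, as well as the row-major pose layout, are assumptions made for illustration.

```python
import math
from typing import List, Sequence, Set, Tuple

Point = Tuple[float, float, float]
Pose = Sequence[Sequence[float]]  # 4x4 row-major rigid transform, sensor -> shared frame


def transform(point: Point, pose: Pose) -> Point:
    """Apply a 4x4 homogeneous transform to a single 3D point."""
    x, y, z = point
    return tuple(
        pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3]
        for r in range(3)
    )


def fuse_scans(scans: Sequence[Sequence[Point]], poses: Sequence[Pose]) -> List[Point]:
    """Concatenate several scans after moving each into the shared frame."""
    fused: List[Point] = []
    for scan, pose in zip(scans, poses):
        fused.extend(transform(p, pose) for p in scan)
    return fused


def voxelize(
    points: Sequence[Point],
    origin: Point,
    voxel_size: float,
    grid_shape: Tuple[int, int, int],
) -> Set[Tuple[int, int, int]]:
    """Return the set of grid indices that contain at least one point."""
    occupied: Set[Tuple[int, int, int]] = set()
    for p in points:
        idx = tuple(math.floor((p[a] - origin[a]) / voxel_size) for a in range(3))
        # Discard points that fall outside the occupancy grid.
        if all(0 <= idx[a] < grid_shape[a] for a in range(3)):
            occupied.add(idx)
    return occupied
```

Fusing several frames before voxelizing is what makes the labels denser than any single sparse scan: voxels missed in one frame can be filled by points observed in another.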

Experiments

More Visualizations

Citation

@article{wei2023surroundocc,
  title={SurroundOcc: Multi-Camera 3D Occupancy Prediction for Autonomous Driving},
  author={Yi Wei and Linqing Zhao and Wenzhao Zheng and Zheng Zhu and Jie Zhou and Jiwen Lu},
  journal={arXiv preprint arXiv:2303.09551},
  year={2023}
}